Database Access & DevOps – The Evolution, Challenges & Solutions

4 MIN. READING

Database Virtualization: a Better Approach to Test Data Management (TDM)

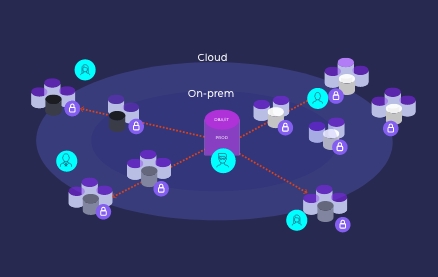

To meet the database copy needs of DevOps, QA, and agile development teams, a holistic approach to test data management (TDM) is required. It’s critical to balance the need for speedy test database provisioning with data privacy compliance and storage costs – which can get out of control if the database copy volume is high.

Modern TDM platforms that combine database virtualization with AI-based automated masking, wrapped in a user-friendly UI and API, are well suited to this. The ideal TDM platform also provides a self-service process that removes the dependence on DBAs and other IT admins.

Virtual databases allow database copies to be delivered quickly while consuming negligible storage, and automated provisioning increases DevOps release velocity. Teams can create more copies of environments and run more functional tests, which reduces the number of defects that reach production.

Furthermore, virtualized databases dramatically reduce cloud storage costs, because the data is stored in one place and accessed from many others. A virtual database also acts as a data container, so it can be used across multiple cloud environments, wherever storage is less expensive.
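To make the storage argument concrete, here is a rough back-of-the-envelope sketch in Python. It assumes that virtual copies share a single base image and each stores only the blocks it changes. That is a common way to implement database virtualization, but it is an assumption here rather than a description of any specific product. The function names, the 500 GB source size, the 20 copies, and the 2% change rate are all hypothetical.

```python
def full_copy_storage_gb(source_gb: float, copies: int) -> float:
    """Storage needed when every test database is a complete physical copy."""
    return source_gb * copies


def virtual_copy_storage_gb(source_gb: float, copies: int, change_rate: float) -> float:
    """Storage needed when clones share one base image.

    Assumption: each clone stores only the blocks it modifies.
    change_rate is the hypothetical fraction of the source each clone changes.
    """
    if not 0.0 <= change_rate <= 1.0:
        raise ValueError("change_rate must be between 0 and 1")
    # One shared base image plus the per-clone changes.
    return source_gb + source_gb * change_rate * copies


if __name__ == "__main__":
    # Illustrative numbers only, not measurements.
    source_gb, copies, change_rate = 500.0, 20, 0.02
    full = full_copy_storage_gb(source_gb, copies)
    virtual = virtual_copy_storage_gb(source_gb, copies, change_rate)
    print(f"full copies:    {full:.0f} GB")
    print(f"virtual clones: {virtual:.0f} GB")
```

Under these assumptions the gap widens as the number of copies grows, because each additional virtual clone adds only its own changes rather than another full copy of the source.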

Teams should have an automated process in place to ensure that specific databases are masked, thus keeping the company data secure and privacy compliant. Automated, on-the-fly masking should be integrated directly into the build or refresh of test databases to prevent exposure of sensitive data.
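As a minimal sketch of what masking built into the refresh step might look like, the Python example below copies rows from a source database into a test database and replaces sensitive values in flight, so unmasked data never lands in the test copy. It uses in-memory SQLite databases purely for illustration. The `customers` table, the `SENSITIVE_COLUMNS` list, the secret key, and the function names are hypothetical. A real TDM platform would typically discover sensitive columns automatically and may use different masking techniques.

```python
import hashlib
import hmac
import sqlite3

# Hypothetical list of sensitive columns; assumed to be known in advance here.
SENSITIVE_COLUMNS = {"email", "ssn"}


def mask_value(value: str, secret: bytes) -> str:
    """Replace a value with a deterministic token derived from a keyed hash.

    The same input always yields the same token, so joins across tables
    still line up, but the original value cannot be read from the token.
    """
    digest = hmac.new(secret, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"masked_{digest[:12]}"


def refresh_with_masking(source, target, table, columns, secret):
    """Rebuild `table` in target from source, masking sensitive columns in flight.

    Table and column names are interpolated into SQL, so they must come
    from trusted configuration, never from user input.
    """
    col_list = ", ".join(columns)
    placeholders = ", ".join("?" for _ in columns)
    target.execute(f"DELETE FROM {table}")
    for row in source.execute(f"SELECT {col_list} FROM {table}"):
        masked = [
            mask_value(str(v), secret) if c in SENSITIVE_COLUMNS and v is not None else v
            for c, v in zip(columns, row)
        ]
        target.execute(f"INSERT INTO {table} ({col_list}) VALUES ({placeholders})", masked)
    target.commit()


def demo():
    """Run one refresh against throwaway in-memory databases."""
    schema = "CREATE TABLE customers (id INTEGER, name TEXT, email TEXT, ssn TEXT)"
    source = sqlite3.connect(":memory:")
    target = sqlite3.connect(":memory:")
    source.execute(schema)
    target.execute(schema)
    source.execute(
        "INSERT INTO customers VALUES (1, 'Ada', 'ada@example.com', '123-45-6789')"
    )
    source.commit()
    refresh_with_masking(
        source, target, "customers", ["id", "name", "email", "ssn"], b"demo-secret"
    )
    return target.execute("SELECT id, name, email, ssn FROM customers").fetchall()


if __name__ == "__main__":
    for row in demo():
        print(row)
```

Because the masking happens inside the refresh function itself, there is no separate step that someone could forget to run before handing the test database to a team.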

The Future of Database Access in DevOps

As companies move towards the use of cloud environments and more distributed systems, the need for efficient and secure test data management solutions will only continue to grow. Companies must invest in TDM platforms that can provide reliable and efficient delivery of test data, while also ensuring data privacy compliance and minimizing storage costs. These TDM solutions will accelerate the ability to create more accurate and representative test data environments, leading to better testing outcomes, fewer software flaws, and faster time to market.